The content of this introduction paper is based on the article "Quality Testing in Aluminum Die-Casting – A Novel Approach Using Acoustic Data in Neural Networks" published in the Athens Journal of Sciences.

1. Overview:

- Title: Quality Testing in Aluminum Die-Casting – A Novel Approach Using Acoustic Data in Neural Networks

- Authors: Manfred Rössle & Stefan Pohl

- Year of publication: 2024

- Journal/academic society of publication: Athens Journal of Sciences

- Keywords: acoustic quality control, aluminum die casting, convolutional neural networks, sound data

2. Abstract:

Various processes are used for quality control in aluminum die casting. For example, the density of the parts can be measured, or X-ray and computed tomography (CT) images can be analyzed. All common processes deliver practically usable results. However, none of them is suitable for inline quality control because of the time they take and the cost of the required hardware. Therefore, a concept for a fast and low-cost quality control process using sound samples is presented here. Sound samples of 240 aluminum castings were recorded, and the quality of each part was checked using X-ray images. All parts were divided into the categories "good" (no defects), "medium" (air inclusions, "blowholes") and "poor" (cold flow marks). A Convolutional Neural Network was developed to process the sound samples and was trained with both complete and segmented sound samples ("windowing"). The resulting models were evaluated on a test data set of 120 sound samples. The results are very promising: the two models achieve accuracies of 95% and 87%, respectively. They show that a new process of acoustic quality control can be realized using a neural network, which classifies most of the aluminum castings into the correct categories.

3. Introduction:

Fast and cost-efficient quality control is pivotal in manufacturing. Modern methods, including artificial intelligence and neural networks, offer new possibilities. Traditional quality assurance for aluminum castings, such as computed tomography (CT) and X-ray analysis, effectively detects defects like air pockets ("blowholes") or cracks. However, these methods are often time-consuming (e.g., 20-30 minutes for CT) compared to typical process times (approx. 30 seconds per piece), rendering them unsuitable for inline process control. This study explores the viability of using sound data processed by neural networks as a fast, low-cost, inline-capable quality assurance method. The underlying hypothesis is that manufacturing defects alter the density of castings, thereby changing their acoustic properties (sound and frequencies), which can be identified by a neural network.

4. Summary of the study:

Background of the research topic:

Quality control in aluminum die-casting relies on methods like density measurement, X-ray imaging, and computed tomography. While effective, these methods face limitations regarding speed and cost, hindering their application for inline quality control during production.

Status of previous research:

Significant progress has been made in processing audio data with neural networks for applications such as speech, music, and pattern recognition. Common techniques process either raw audio or image-like representations such as spectrograms or Mel-Frequency Cepstral Coefficients (MFCCs), often with Convolutional Neural Networks (CNNs). While specific research on acoustic quality assurance for aluminum die-castings using neural networks is lacking, related work includes automated quality control of castings using X-ray images and CNNs, as well as acoustic quality control of other products such as welds, ceramic tiles, and gearboxes.

Purpose of the study:

The study aims to investigate the feasibility of establishing a novel, fast, and low-cost inline quality control process for aluminum die-castings by analyzing acoustic data with neural networks. The core idea is that defects altering material density will produce distinct sound patterns detectable by a trained network.

Core study:

The research involved recording sound samples from 240 aluminum castings, previously classified via X-ray into "good" (no defects), "medium" (air inclusions/"blowholes"), and "poor" (cold flow marks) categories (80 parts each). Sound was generated by striking the parts with a pendulum under controlled conditions. A Convolutional Neural Network (CNN) was developed, inspired by existing architectures (e.g., VGG Net). The network was trained using Mel-Frequency Cepstral Coefficients (MFCC) derived from the sound samples. Two training approaches were compared: using the complete sound samples and using segmented sound samples ("windowing") to artificially increase the dataset size. The trained models were evaluated on a separate test dataset.

5. Research Methodology

Research Design:

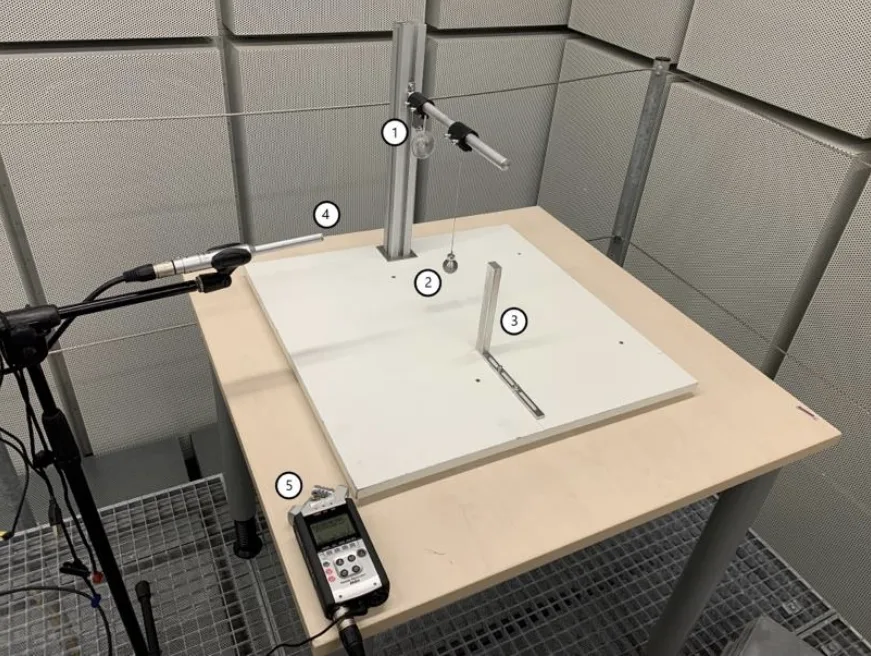

An experimental design was employed using 240 aluminum castings produced with specific, "extreme" parameters to ensure distinct quality categories. Sound samples were recorded in a controlled environment (soundproof room). A custom holding fixture and pendulum setup (Figure 1) ensured consistency. A CNN architecture was designed based on established practices in audio classification. Two training strategies (complete vs. segmented samples) were implemented and evaluated using 10-fold cross-validation.

Data Collection and Analysis Methods:

- Data Source: 240 aluminum castings (19.8 x 14.8 x 0.4 cm) from Aalen University foundry, categorized ("good", "medium", "poor") by die-casting experts using X-ray images.

- Sound Recording: Performed in a soundproof room using a Zoom Handy Recorder H4n and Behringer ECM8000 microphone. Recordings were in WAV format (96 kHz, 24 bits, mono). Two samples were taken per casting (total 480 samples).

- Preprocessing: Sound files were trimmed to remove leading and trailing silence (using a threshold), standardized to a length of 5 seconds, and resampled from 96 kHz to 16 kHz.

- Feature Extraction: Mel-Frequency Cepstral Coefficients (MFCC) were computed from the preprocessed audio data.
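The preprocessing and feature-extraction steps above can be sketched with NumPy alone. This is a minimal illustration under assumptions, not the authors' code: the silence threshold (0.02), FFT size (512), hop length (256), number of mel bands (64) and number of MFCCs (40) are hypothetical values, and the conversion from 96 kHz to 16 kHz uses simple averaging over blocks of six samples instead of a proper anti-aliasing resampler.

```python
import numpy as np

SR_IN = 96_000     # recording rate reported in the study
SR_OUT = 16_000    # target rate after resampling
CLIP_SECONDS = 5   # standardized clip length


def trim_silence(x: np.ndarray, threshold: float = 0.02) -> np.ndarray:
    """Cut leading and trailing samples whose magnitude stays below threshold."""
    loud = np.flatnonzero(np.abs(x) >= threshold)
    return x[loud[0]:loud[-1] + 1] if loud.size else x[:0]


def fix_length(x: np.ndarray, n: int) -> np.ndarray:
    """Truncate or zero-pad the signal to exactly n samples."""
    return x[:n] if len(x) >= n else np.pad(x, (0, n - len(x)))


def downsample(x: np.ndarray, factor: int) -> np.ndarray:
    """Crude resampling: average non-overlapping blocks of `factor` samples."""
    usable = len(x) // factor * factor
    return x[:usable].reshape(-1, factor).mean(axis=1)


def preprocess(raw: np.ndarray) -> np.ndarray:
    """Trim silence, standardize to 5 s at 96 kHz, then reduce to 16 kHz."""
    clip = fix_length(trim_silence(raw), CLIP_SECONDS * SR_IN)
    return downsample(clip, SR_IN // SR_OUT)


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_mels: int, n_fft: int, sr: int) -> np.ndarray:
    """Triangular filters spaced evenly on the mel scale, shape (n_mels, n_fft//2 + 1)."""
    mels = np.linspace(hz_to_mel(0.0), hz_to_mel(sr / 2), n_mels + 2)
    bins = np.floor((n_fft + 1) * mel_to_hz(mels) / sr).astype(int)
    fb = np.zeros((n_mels, n_fft // 2 + 1))
    for m in range(1, n_mels + 1):
        left, center, right = bins[m - 1], bins[m], bins[m + 1]
        for k in range(left, center):
            fb[m - 1, k] = (k - left) / max(center - left, 1)
        for k in range(center, right):
            fb[m - 1, k] = (right - k) / max(right - center, 1)
    return fb


def dct_matrix(n_out: int, n_in: int) -> np.ndarray:
    """Orthonormal DCT-II basis, shape (n_out, n_in)."""
    k = np.arange(n_out)[:, None]
    n = np.arange(n_in)[None, :]
    basis = np.sqrt(2.0 / n_in) * np.cos(np.pi * k * (2 * n + 1) / (2 * n_in))
    basis[0] /= np.sqrt(2.0)
    return basis


def mfcc(x, sr=SR_OUT, n_fft=512, hop=256, n_mels=64, n_mfcc=40):
    """Frame, window, power spectrum, mel filterbank, log, DCT -> (frames, n_mfcc)."""
    n_frames = 1 + (len(x) - n_fft) // hop
    idx = np.arange(n_fft)[None, :] + hop * np.arange(n_frames)[:, None]
    power = np.abs(np.fft.rfft(x[idx] * np.hanning(n_fft), axis=1)) ** 2 / n_fft
    log_mel = np.log(power @ mel_filterbank(n_mels, n_fft, sr).T + 1e-10)
    return log_mel @ dct_matrix(n_mfcc, n_mels).T


# Usage with a synthetic decaying 1 kHz "strike" framed by silence.
t = np.arange(3 * SR_IN) / SR_IN
tone = np.exp(-t) * np.sin(2 * np.pi * 1000 * t)
raw = np.concatenate([np.zeros(SR_IN // 5), tone, np.zeros(SR_IN // 5)])
clip = preprocess(raw)
features = mfcc(clip)
print(clip.shape, features.shape)   # (80000,) (311, 40)
```

The synthetic tone only stands in for a recorded pendulum strike. In practice, library routines such as `librosa.feature.mfcc` and a polyphase resampler such as `scipy.signal.resample_poly` would normally replace the hand-written helpers.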

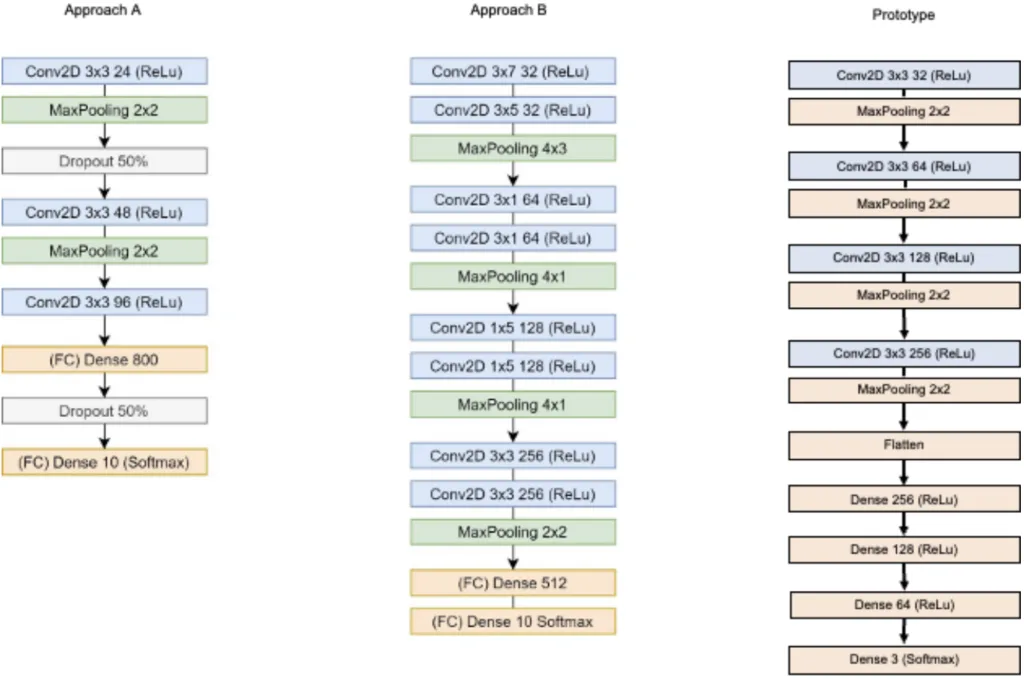

- Model: A Convolutional Neural Network (CNN) prototype was developed (Figure 2, Prototype). It consists of four blocks, each combining a Conv2D layer with 3x3 filters (the number of filters doubling from 32 to 256) and a 2x2 MaxPooling layer, followed by a Flatten layer and four Dense layers (256, 128, 64, and 3 units). All layers use ReLU activation except the final Dense layer, which uses Softmax.

- Training: Performed using Python libraries (presumably Keras/TensorFlow, though this is not explicitly stated). Two methods were compared:

  - Complete samples: MFCCs computed from the entire 5-second samples (360 training samples).

  - Segmented samples: MFCCs computed from randomly selected 2-second segments; the random selection was repeated 20,000 times to augment the training data.
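The prototype architecture described above can be traced layer by layer to see how tensor shapes and parameter counts evolve. The summary does not state the input size or the padding mode, so this plain-Python sketch assumes a hypothetical 40 x 311 x 1 MFCC input (40 coefficients by 311 frames), 'same' padding for the convolutions, and 2x2 max pooling with stride 2. It is an illustration of the arithmetic, not the authors' model definition.

```python
from typing import List, Tuple

Row = Tuple[str, Tuple[int, ...], int]   # (layer, output shape, trainable parameters)


def trace_cnn(input_shape: Tuple[int, int, int]) -> Tuple[List[Row], int]:
    """Trace output shapes and parameter counts of the four-block prototype,
    assuming 'same' convolution padding and 2x2 max pooling with stride 2."""
    h, w, c = input_shape
    rows: List[Row] = []
    for filters in (32, 64, 128, 256):            # Conv2D filters double per block
        params = 3 * 3 * c * filters + filters     # 3x3 kernel weights plus biases
        c = filters                                 # 'same' padding keeps h and w
        rows.append((f"Conv2D({filters})", (h, w, c), params))
        h, w = h // 2, w // 2                       # pooling halves h and w (floor)
        rows.append(("MaxPooling2D", (h, w, c), 0))
    units_in = h * w * c
    rows.append(("Flatten", (units_in,), 0))
    for units in (256, 128, 64, 3):                # ReLU layers, then 3-way softmax
        params = units_in * units + units
        rows.append((f"Dense({units})", (units,), params))
        units_in = units
    return rows, sum(r[2] for r in rows)


# Usage with a hypothetical 40 x 311 x 1 MFCC input.
summary, n_params = trace_cnn((40, 311, 1))
for name, shape, params in summary:
    print(f"{name:<14}{str(shape):<16}{params}")
print("trainable parameters:", n_params)   # trainable parameters: 2919811
```

Under these assumptions, about 2.49 million of the roughly 2.92 million trainable parameters sit in the first Dense layer, because the flattened feature map still holds 9,728 values. In Keras, the same assumptions would correspond to `Conv2D(filters, (3, 3), padding="same", activation="relu")` followed by `MaxPooling2D((2, 2))` in each block.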

Training used 10-fold cross-validation on the 75% training split (360 samples); the remaining 25% (120 samples) formed the test set. The training parameters (Table 2) included the categorical cross-entropy loss, the Adam optimizer, and accuracy as the evaluation metric.
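The summary reports a 75/25 split (360 training and 120 test samples) with 10-fold cross-validation, but not how the split was drawn. The NumPy sketch below assumes a class-stratified split at the level of castings, so that both recordings of a casting always land on the same side; with 80 castings per class this reproduces the 360/120 split and yields ten folds of 36 recordings. It also draws random 2-second windows for the segmented approach, returning only (clip index, start sample) pairs so that 20,000 windows need not be held in memory at once. The casting-ID layout and all function names are hypothetical.

```python
import numpy as np

CLASSES = ("good", "medium", "poor")
PER_CLASS = 80      # 240 castings in total
SR = 16_000         # sampling rate after preprocessing

# Hypothetical ID layout: castings 0-79 "good", 80-159 "medium", 160-239 "poor";
# recording r (0 or 1) of casting c has recording index 2 * c + r.
casting_labels = np.repeat(np.arange(len(CLASSES)), PER_CLASS)


def stratified_split(rng, test_fraction=0.25, k=10):
    """Split castings 75/25 within each class, then deal training castings into k folds."""
    train, test, folds = [], [], [[] for _ in range(k)]
    for cls in range(len(CLASSES)):
        ids = rng.permutation(np.flatnonzero(casting_labels == cls))
        n_test = int(len(ids) * test_fraction)
        test.extend(ids[:n_test])
        train.extend(ids[n_test:])
        for i, chunk in enumerate(np.array_split(ids[n_test:], k)):
            folds[i].extend(chunk)
    return np.array(train), np.array(test), [np.array(f) for f in folds]


def recordings_of(casting_ids):
    """Indices of both recordings of each casting, so a casting is never split up."""
    ids = np.asarray(casting_ids)
    return np.sort(np.concatenate([2 * ids, 2 * ids + 1]))


def sample_windows(n_clips, clip_len, n_windows, rng, seconds=2.0, sr=SR):
    """Draw (clip index, start sample) pairs for random fixed-length windows."""
    win = int(seconds * sr)
    clip_idx = rng.integers(0, n_clips, size=n_windows)
    starts = rng.integers(0, clip_len - win + 1, size=n_windows)
    return clip_idx, starts, win


# Usage
rng = np.random.default_rng(42)
train, test, folds = stratified_split(rng)
print(len(recordings_of(train)), len(recordings_of(test)))   # 360 120

# Segmented approach for one fold: windows come only from that fold's training part.
fit_recs = recordings_of(np.concatenate(folds[1:]))          # fold 0 is validation
clip_idx, starts, win = sample_windows(len(fit_recs), 5 * SR, 20_000, rng)
window_labels = casting_labels[fit_recs[clip_idx] // 2]
```

Drawing windows only from the training part of each fold keeps segments of validation and test recordings out of training, which would otherwise inflate the measured accuracy.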

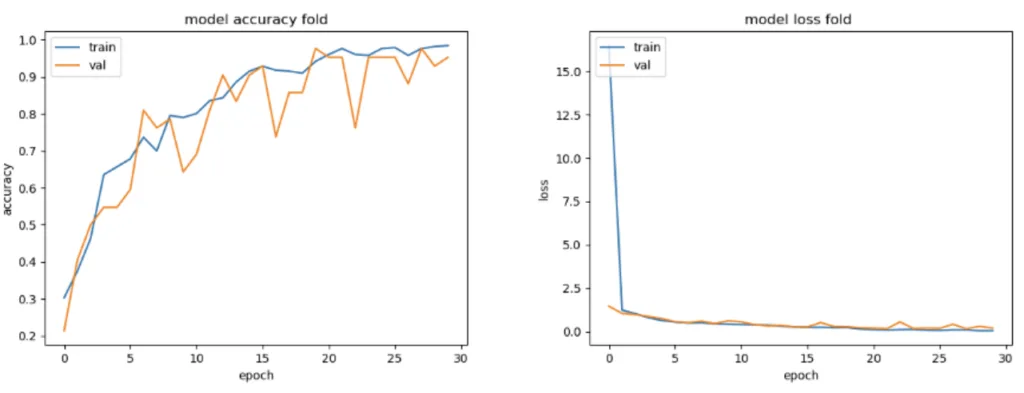

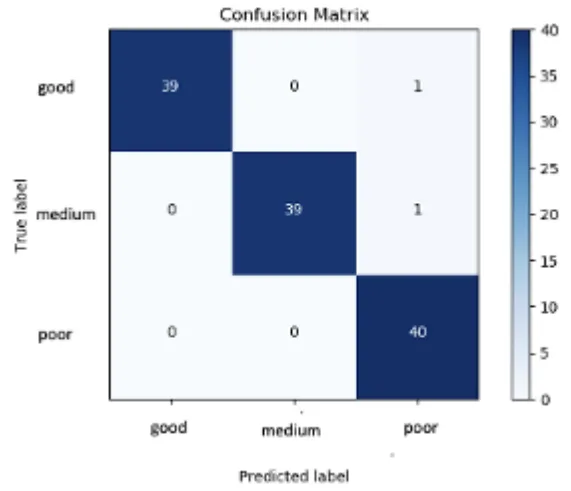

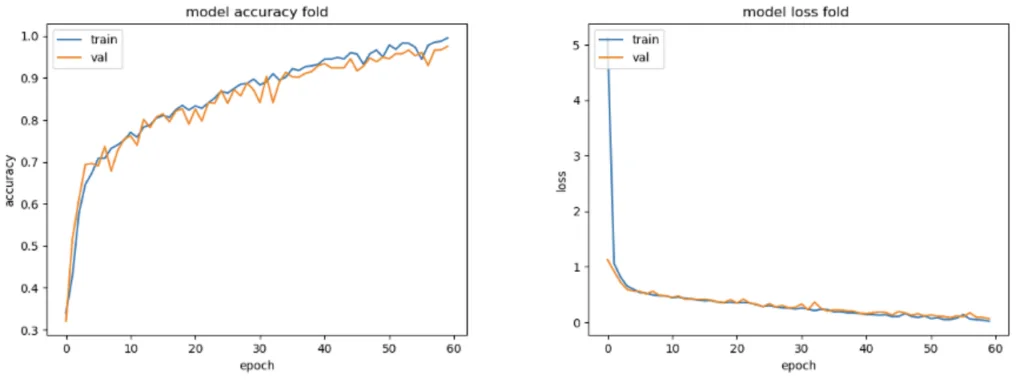

- Evaluation: Model performance was assessed using accuracy and loss curves recorded during training (Figure 3, Figure 5) and confusion matrices on the test set (Figure 4, Figure 6). The predicted class probabilities were also analyzed (Table 4).
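Test-set accuracy and the confusion matrix follow directly from the network's softmax outputs: each sample is assigned the class with the highest probability, and the matrix counts (true class, predicted class) pairs. A minimal NumPy sketch, using six invented probability rows rather than the study's data:

```python
import numpy as np

CLASSES = ("good", "medium", "poor")


def confusion_matrix(y_true, y_pred, n_classes=len(CLASSES)):
    """Count (true class, predicted class) pairs; rows are true, columns predicted."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def accuracy(cm):
    """Share of samples on the diagonal, i.e. classified into their true class."""
    return np.trace(cm) / cm.sum()


# Invented softmax outputs for six test samples (columns: good, medium, poor).
probs = np.array([
    [0.90, 0.07, 0.03],
    [0.40, 0.15, 0.45],
    [0.10, 0.85, 0.05],
    [0.20, 0.70, 0.10],
    [0.05, 0.05, 0.90],
    [0.10, 0.30, 0.60],
])
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = probs.argmax(axis=1)          # class with the highest probability
cm = confusion_matrix(y_true, y_pred)
print(cm)
print(f"accuracy = {accuracy(cm):.3f}")   # accuracy = 0.833
```

Here the second "good" sample is predicted as "poor", which shows up as the off-diagonal entry in the first row.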

Research Topics and Scope:

The research scope covers the acquisition of acoustic data from aluminum die-castings of varying quality, preprocessing of this data, extraction of MFCC features, development and training of a CNN model for classification, and evaluation of the model's ability to distinguish between "good", "medium", and "poor" quality castings based on sound. The study compares the effectiveness of training with complete versus segmented sound samples.

6. Key Results:


- The proposed acoustic quality control method using a CNN demonstrated promising results.

- The model trained with complete sound samples achieved an accuracy of 95% on the test dataset (120 samples). The confusion matrix (Figure 4) showed only two misclassifications: one "good" and one "medium" sample were classified as "poor".

- The model trained with segmented sound samples achieved an average accuracy of 87% on the test dataset (best model 90%, worst model 86% across the 10 folds; Table 3). The confusion matrix (Figure 6) showed more misclassifications, particularly of "good" and "medium" samples.

- The training curves (accuracy and loss, Figures 3 and 5) suggested suitable numbers of training epochs (30 for complete samples, 60 for segmented samples) and did not indicate significant overfitting for either method under the tested conditions.

- Requiring a minimum classification probability (e.g., 80%) to ensure unambiguous classification substantially increased the number of misclassified samples for both models.

- The study successfully demonstrated the potential for differentiating casting quality based on acoustic signatures processed by a CNN.
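The effect of a probability threshold can be illustrated with a small NumPy sketch. The summary does not say how samples below the threshold were counted; this sketch assumes they are treated as misclassified (not assigned to their true class), and the probabilities are invented for illustration.

```python
import numpy as np


def thresholded_predictions(probs, threshold=0.8):
    """Argmax class, or -1 ("not assigned") when the top probability is below threshold."""
    return np.where(probs.max(axis=1) >= threshold, probs.argmax(axis=1), -1)


def count_errors(y_true, y_pred):
    """Samples not assigned to their true class; unassigned samples count as errors."""
    return int(np.sum(np.asarray(y_pred) != np.asarray(y_true)))


# Invented softmax outputs (columns: good, medium, poor) and true labels.
probs = np.array([
    [0.90, 0.07, 0.03],
    [0.40, 0.15, 0.45],
    [0.10, 0.85, 0.05],
    [0.20, 0.70, 0.10],
    [0.05, 0.05, 0.90],
    [0.10, 0.30, 0.60],
])
y_true = np.array([0, 0, 1, 1, 2, 2])
print(count_errors(y_true, thresholded_predictions(probs, threshold=0.0)))  # 1
print(count_errors(y_true, thresholded_predictions(probs, threshold=0.8)))  # 3
```

In this toy example a single error without a threshold becomes three errors at 80%, because two correct but low-confidence predictions (0.70 and 0.60) are no longer accepted. This qualitatively mirrors the increase in misclassifications reported above.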

Figure Name List:

- Figure 1. Design of Experiment

- Figure 2. Architectures of CNNs

- Figure 3. Model Accuracy and Loss for Complete Sound Samples

- Figure 4. Confusion Matrix for Complete Sound Samples

- Figure 5. Model Accuracy and Loss for Segmented Sound Samples

- Figure 6. Confusion Matrix for Segmented Sound Samples

7. Conclusion:

The obtained results indicate that a new inline quality assurance process utilizing sound data processing in neural networks is fundamentally feasible for aluminum die-castings. The approach successfully classified parts into quality categories based on their acoustic response. However, the study highlights limitations, including the small dataset size, the use of parts cast with extreme parameters (not representing the "grey area" common in production), the simple geometry of the test parts, and the controlled laboratory environment lacking typical manufacturing noise. Further investigations are necessary to address these limitations, validate the approach with larger datasets and more complex parts, and assess its robustness in a real-world manufacturing environment before practical implementation.

8. References:

- Abdoli S, Cardinal P, Lameiras Koerich A (2019) End-to-end environmental sound classification using a 1D convolutional neural network. Expert Systems with Applications 136(Dec): 252–263.

- Boddapati V, Petef A, Rasmusson J, Lundberg L (2017) Classifying environmental sounds using image recognition networks. Procedia Computer Science 112: 2048–2056.

- Costa YMG, Oliveira LS, Silla CN (2017) An evaluation of Convolutional Neural Networks for music classification using spectrograms. Applied Soft Computing 52(C): 28–38.

- Cunha R, Medeiros De Araujo G, Maciel R, Nandi GS, Da-Ros MR, et al. (2018) Applying non-destructive testing and machine learning to ceramic tile quality control. In SBESC 2018. 2018 VIII Brazilian Symposium on Computing Systems Engineering: Proceedings, 54–61. Salvador, Brazil, November 6-9, 2018. Los Alamitos, CA: Conference Publishing Services, IEEE Computer Society.

- Gulli A (2017) Deep learning with Keras. Implement neural networks with Keras on Theano and TensorFlow. Birmingham, UK: Packt Publishing.

- Hassan SU, Zeeshan Khan M, Ghani Khan MU, Saleem S (2019) Robust sound classification for surveillance using time frequency audio features. In 2019 International Conference on Communication Technologies (ComTech), 13–18. 20-21 March, 2019, Military College of Signals, National University of Sciences & Technology. Piscataway, New Jersey: IEEE.

- Huzaifah M (2017) Comparison of time-frequency representations for environmental sound classification using convolutional neural networks. Available at: https://arxiv.org/pdf/1706.07156.

- Jing L, Zhao M, Li P, Xu X (2017) A convolutional neural network based feature learning and fault diagnosis method for the condition monitoring of gearbox. Measurement 111(Dec): 1–10.

- Khamparia A, Gupta D, Nguyen NG, Khanna A, Pandey B, Tiwari P (2019) Sound classification using convolutional neural network and tensor deep stacking network. IEEE Access 7(Jan): 7717–7727.

- Kong Z, Tang B, Deng L, Liu W, Han Y (2020) Condition monitoring of wind turbines based on spatiotemporal fusion of SCADA data by convolutional neural networks and gated recurrent units. Renewable Energy 146(Feb): 760–768.

- Kothuru A, Nooka SP, Liu R (2019) Application of deep visualization in CNN-based tool condition monitoring for end milling. Procedia Manufacturing 34: 995–1004.

- Krizhevsky A, Sutskever I, Hinton GE (2017) ImageNet classification with deep convolutional neural networks. Communications of the ACM 60(6): 84–90.

- Lai J-H, Liu C-L, Chen X, Zhou J, Tan T, Zheng N, et al. (2018) Pattern Recognition and Computer Vision. Cham: Springer International Publishing.

- Lv N, Xu Y, Li S, Yu X, Chen S (2017) Automated control of welding penetration based on audio sensing technology. Journal of Materials Processing Technology 250: 81–98.

- Mery D (2020) Aluminum casting inspection using deep learning: a method based on convolutional neural networks. Journal of Nondestructive Evaluation 39(1): 1–13.

- Moolayil J (2019) Learn keras for deep neural networks. Berkeley, CA: Apress.

- Nguyen TP, Choi S, Park S-J, Park SH, Yoon J (2020) Inspecting method for defective casting products with convolutional neural network (CNN). International Journal of Precision Engineering and Manufacturing-Green Technology 8(Feb): 583–594.

- Nie J-Y, Obradovic Z, Suzumura T, Ghosh R, Nambiar R, Wang C (Eds.) (2017) 2017 IEEE International Conference on Big Data. Dec 11-14, 2017, Boston, MA, USA: Proceedings. Piscataway, NJ: IEEE.

- Olson DL, Delen D (2008) Advanced data mining techniques. Berlin, Heidelberg: Springer-Verlag Berlin Heidelberg.

- Piczak KJ (2015) Environmental sound classification with convolutional neural networks. In D Erdoğmuş (ed.), 2015 IEEE 25th International Workshop on Machine Learning for Signal Processing (MLSP), 1–6. 17-20 Sept. 2015, Boston, USA. Piscataway, NJ: IEEE.

- Przybył K, Duda A, Koszela K, Stangierski J, Polarczyk M, Gierz L (2020) Classification of Dried Strawberry by the Analysis of the Acoustic Sound with Artificial Neural Networks. Sensors (Basel, Switzerland) 20(2): 499.

- Purwins H, Li B, Virtanen T, Schlüter J, Chang S-Y, Sainath T (2019) Deep learning for audio signal processing. IEEE Journal of Selected Topics in Signal Processing 13(2): 206–219.

- Salamon J, Bello JP (2017) Deep convolutional neural networks and data augmentation for environmental sound classification. IEEE Signal Processing Letters 24(3): 279–283.

- Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. Available at: https://arxiv.org/pdf/1409.1556.

- Voith (2020) OnCare. Available at: http://voith.com/corp-de/products-services/automation-digital-solutions/oncare.html?

- Wani MA, Bhat FA, Afzal S, Khan AI (2020) Advances in deep learning. Singapore: Springer.

- Tokozume Y, Harada T (2017) Learning environmental sounds with end-to-end convolutional neural network. IEEE.

9. Copyright:

- This material is a summary of the paper "Quality Testing in Aluminum Die-Casting – A Novel Approach Using Acoustic Data in Neural Networks" by Manfred Rössle & Stefan Pohl.

- Source of the paper: https://doi.org/10.30958/ajs.X-Y-Z

This material is summarized based on the above paper, and unauthorized use for commercial purposes is prohibited.

Copyright © 2025 CASTMAN. All rights reserved.